
Friday

3 Jul 2020

8:00 am - 9:00 am

Event Time:

Friday, 3 July @ 0800 (AEST) (Melbourne)

Friday, 3 July @ 0600 (CST) (Beijing, China)

Friday, 3 July @ 0330 (IST) (New Delhi, India)

Thursday, 2 July @ 1500 (PDT) (Berkeley)

Thursday, 2 July @ 1800 (EDT) (New York)

Thursday, 2 July @ 2300 (BST) (London)

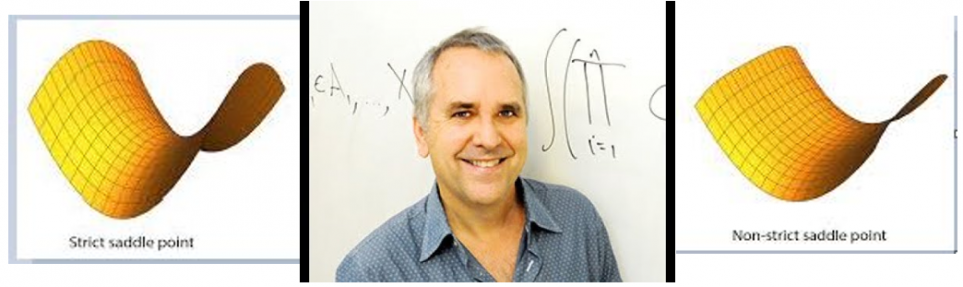

Presenter: Professor Michael I. Jordan, University of California, Berkeley

Biography: UC Berkeley & Wikipedia

Michael is a professor at the University of California, Berkeley (UCB), where he holds appointments in electrical engineering and computer sciences and in statistics, and a researcher in machine learning, statistics, and artificial intelligence. He is one of the leading figures in machine learning, and in 2016 Science reported him as the world’s most influential computer scientist.

(Source: Wikipedia)

Topic: Optimization with Momentum: Variational, Hamiltonian, and Symplectic Perspectives

Abstract: Many new mathematical challenges have arisen in the area of gradient-based optimization for large-scale statistical data analysis. I will argue that significant insight can be obtained by taking a continuous-time, variational perspective on optimization. Essentially we can obtain insight by formulating the question of the “optimal way to optimize”. Using a (dissipative) Hamiltonian formulation of the continuous-time dynamics, together with a particular discretization, I show how to obtain algorithms that preserve the favorable properties of the continuous-time dynamics. Moreover, I show how to obtain rate-matching lower bounds in continuous time. I’ll discuss both convex and nonconvex problems, and, time permitting, stochastic counterparts based on Langevin diffusions.

Structure: 45-minute seminar followed by 15 minutes of question time

Seminar Recording & Slides:

Please click here for the recording of the webinar.

Please click here for the presentation slides.